By Gabriel Ameh

Consumer protection in Nigeria’s telecommunications sector has long followed a familiar, often frustrating path: a complaint is lodged, a ticket is opened, and the consumer waits.

By the time resolution comes, if it does, confidence has already been strained.

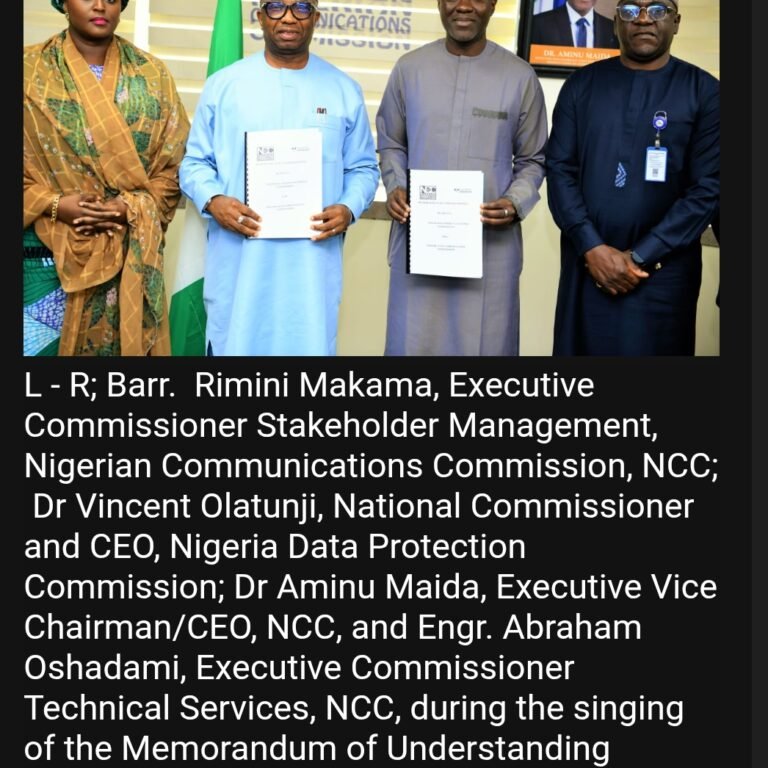

The Nigerian Communications Commission (NCC) is seeking to disrupt that cycle.

With the deployment of an artificial intelligence–driven consumer protection platform, the Commission is repositioning regulation from a reactive mechanism to a responsive, living system: one that listens, anticipates, and intervenes earlier in the consumer experience.

At the heart of the platform is its ability to detect what traditional oversight often overlooks. Patterns of dropped calls, unexplained billing irregularities, and persistent service outages in specific locations, issues once buried deep in reports and spreadsheets, are now identified in near real time. Here, artificial intelligence is not deployed for novelty, but for attention: sustained, data-informed awareness of how consumers actually experience telecom services.

Rather than waiting for subscribers to navigate complaint channels, the system flags distress signals embedded in network behaviour and consumer feedback. A surge in unresolved issues no longer disappears into routine data noise; it becomes an immediate regulatory trigger.

Accountability is thus pushed upstream, closer to the point where service failure occurs, not weeks after damage to trust has been done.

For everyday users, the shift is significant. An institution often viewed as distant becomes more present. Responses are quicker, explanations clearer, and outcomes more consistent.

The platform’s strength lies not only in detection, but in translation: turning complex technical failures into actionable consumer protection and regulatory enforcement. In doing so, it narrows the emotional and institutional gap between regulator and citizen.

Telecom operators are also adjusting to this recalibration. Continuous, AI-assisted oversight reduces room for ambiguity. Service quality trends are harder to contest when they emerge from constant analysis rather than periodic audits.

The incentive structure changes: prevention becomes more economical than correction, and compliance evolves into a competitive advantage rather than a regulatory checkbox.

Beneath the technological leap is a deliberate ethical framework.

The NCC has emphasised strict data-governance safeguards to ensure that protection does not slide into surveillance. The focus remains on monitoring systems, not individuals. This distinction, quietly but firmly upheld, is central to sustaining public trust.

In an era where technology often overwhelms users, this initiative reverses the balance. Artificial intelligence is positioned not as a spectacle, but as a mediator, absorbing complexity so that consumers encounter fewer excuses and clearer outcomes.

Ultimately, the platform’s success will not be measured by how sophisticated it appears, but by how seamless it becomes. When consumer protection works, users feel relief, not innovation.

By choosing AI as a safeguard rather than a showpiece, the NCC is offering a glimpse of forward-thinking regulation: one that listens attentively, acts early, and responds with a human touch, even when powered by code.